Homelab: A prelude

16 Sep 2018

Many moons ago at work, I started working on some performance evaluation. Work mainly uses cloud hosting, quite a change from my previous experience at Twitter, where we ran a Xe6 scale fleet of our own servers.

Once I got to the point of running production-representative workloads, I was honestly shocked at how expensive it was to rent what is, in the grand scale of things, not much compute from our cloud vendor of choice.

update: @ottbot got me a big boy cluster https://t.co/3pcyNRqAGt

— Reid D. McKenzie (@arrdem) April 10, 2018

I think I chewed through $3-5k in the span of what was less than a two-week project, and caught a fair bit of flak from DevOps, who weren’t exactly happy with the bill.

At the time @cemerick was working on his own home datacenter for a customer project, and was talking about the Ryzen-based compute nodes he was building. So I shopped around some and came up with my own build.

The essential components:

- AMD Ryzen 5 1600 ($160)
- Matching motherboard ($110)
- 16GiB of slightly bargain bin RAM ($170)
- 32GiB OS install disks ($64)
- 1T data disks ($160)

Add a slim case and a low-profile cooler, and you’re at a ballpark of $850 per node.
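As a quick sanity check on that ballpark, the itemized parts can be totted up. The case and cooler prices aren’t listed, so the remainder below is only what the $850 figure implies for them, not a quoted price.

```python
# Per-node parts from the list above, in USD.
# Case and cooler aren't itemized, so they're left out here.
parts = {
    "AMD Ryzen 5 1600": 160,
    "motherboard": 110,
    "16GiB RAM": 170,
    "32GiB OS install disks": 64,
    "1T data disks": 160,
}

itemized = sum(parts.values())
ballpark_total = 850  # the per-node ballpark from the text
case_and_cooler = ballpark_total - itemized  # implied, not quoted

print(f"itemized: ${itemized}")                     # itemized: $664
print(f"left for case + cooler: ${case_and_cooler}")  # left for case + cooler: $186
```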

I’ve been an incredibly satisfied DigitalOcean customer for years now. I ran all my web properties there, and some are still there. They’ve consistently delivered more nines than I’m able to measure, and if I really needed to run something I’d think long and hard before hosting it elsewhere.

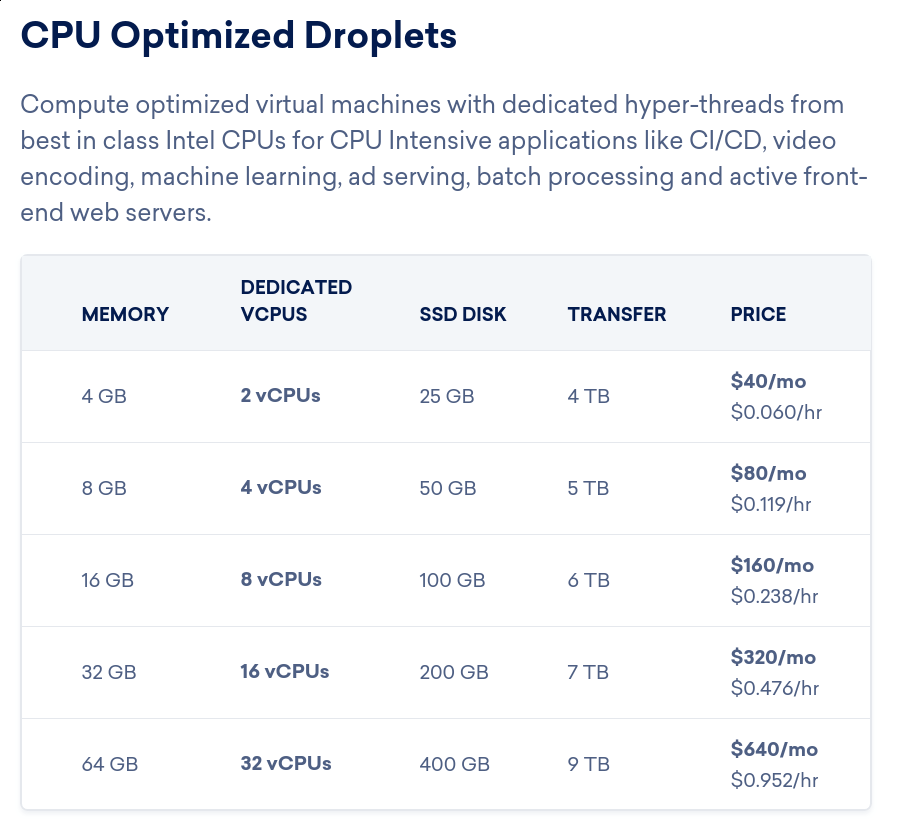

That said, let’s look at their pricing:


The node I spec’d out above offers six decent cores and 16GiB of actually pretty decent RAM. DO’s closest comparables would be somewhere between $80/mo for 4 CPUs and 8GiB of RAM, and $160/mo for 8 CPUs and 16GiB of RAM. If we assume that half the price increase is for the RAM, since the RAM and CPU I picked out fell in the same ballpark of cost, we’d project that DO would price these nodes somewhere around $120/mo. So if we do the math:

\[\frac{\$850}{\frac{\$120}{1\,\text{mo}}} = \$850 \times \frac{1\,\text{mo}}{\$120} \approx 7.083\,\text{mo}\]

So comparing only capital cost, these nodes break even against renting them from DO in just over half a year. Not bad at all, and hardly surprising considering that hosting is a wildly profitable and currently hot business.
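The same break-even arithmetic as a small function, a sketch that treats each month of ownership as saving the rent you would otherwise pay, minus whatever the owned node costs to run. The function name and the optional running-cost parameter are illustrative, not anything from the post.

```python
def break_even_months(capital_cost, monthly_rent, monthly_running_cost=0.0):
    """Months of ownership before buying beats renting.

    Each month you own the node, you avoid paying `monthly_rent`
    but still pay `monthly_running_cost` (power, etc.).
    """
    monthly_savings = monthly_rent - monthly_running_cost
    if monthly_savings <= 0:
        raise ValueError("owning never breaks even at these prices")
    return capital_cost / monthly_savings


node_cost = 850       # ballpark per-node build cost from the text
projected_rent = 120  # projected DO price for a comparable node, $/mo

print(f"{break_even_months(node_cost, projected_rent):.2f} mo")  # 7.08 mo
```

Passing a running cost, e.g. `break_even_months(850, 120, 6.54)`, gives about 7.49 months, which matches the power-adjusted figure in the aside.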

Sure, I won’t break even before depreciation wins if I just turn off the $5 DO instance that runs conj.io and the $15 instance that, at the risk of getting ahead of myself, used to run this fine site, but that’s hardly the point. If I somehow came up with a real workload, or just wanted to play with more than I can squeeze out of my $15 instance, this seems to make pretty good sense.

Aside: @jmpspn, AKA Nik, asked what the numbers looked like factoring in power draw. Spoiler alert: the homelab project rapidly outgrew its original three-node mandate and now includes an APC UPS, which at least gives me aggregate power usage. As of this morning, my 450W APC unit was reporting 660s to full discharge while running all my nodes at idle, plus the network gear and my laptop.

If I call the laptop my error bar for differences in compute workloads, this suggests that my final configuration pulls about 0.0824 kWh every hour, a mere $0.20 a day. Updating with this number, I’m spending $6.54/mo on power, which pushes the break-even point out to 7.49 mo.
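A rough sketch of the power arithmetic, treating 0.0824 as a constant average draw in kW (i.e. 0.0824 kWh every hour). The electricity rate is an assumption, since the post doesn’t state one; at an assumed $0.11/kWh over a flat 30-day month it lands near, though not exactly on, the $6.54/mo figure. The break-even line then uses the post’s own $6.54/mo.

```python
HOURS_PER_DAY = 24
DAYS_PER_MONTH = 30  # assumed flat 30-day month


def monthly_power_cost(avg_draw_kw, usd_per_kwh):
    """Cost of running a constant load around the clock for a month."""
    kwh_per_day = avg_draw_kw * HOURS_PER_DAY
    return kwh_per_day * usd_per_kwh * DAYS_PER_MONTH


avg_draw_kw = 0.0824  # whole setup at idle, per the UPS estimate
rate = 0.11           # ASSUMED electricity price in $/kWh; not from the post

power_per_month = monthly_power_cost(avg_draw_kw, rate)
print(f"power: ${power_per_month:.2f}/mo")  # power: $6.53/mo

# Power-adjusted break-even, using the post's own $6.54/mo figure:
# each month of ownership saves the $120 rent minus the power bill.
print(f"break-even: {850 / (120 - 6.54):.2f} mo")  # break-even: 7.49 mo
```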

I’m treating internet as a sunk cost, since I was going to have a good home uplink anyway.

Running bare metal worked fairly well for me at Twitter - the company had invested pretty heavily in automation to make bare metal ownership at least manageable. And frankly I’d taken most of it for granted, because it did pretty much work. How hard could running bare metal yourself really be?

On this basis, I, er, panicked and bought three (two being the worst number in computing after one). The parts showed up and got built, and then I realized that, honestly, I didn’t have a plan for what to do with them, beyond vague intentions to run a Kafka cluster and maybe some other stuff.


So this is the story of ethos, logos, pathos and my storage array hieroglyph in their apparent conspiracy to ensure that I never achieve more than 99% availability.

^d